Just because your AI is "smart" doesn't mean it should be trusted.

GenAI is impressive. Agentic AI? Even more so. It can route tasks, use MCP servers or call APIs, make "decisions," and increasingly act as an interface between your systems/data and your users (or yourself). But here's the problem: we're increasingly tempted to give these agents god-mode access because they seem intelligent.

But the reality is that most agents today are still little more than very confident interns. They don't understand nuance, they don't question instructions, and they'll happily take candy from strangers but now candy can be hiding a prompt injection in something like a calendar invite. Yikes!

Real-World Threat

Yes, this is real. In a recent exploit, an attacker used acalendar event descriptionto inject instructions into an AI assistant, leading it to exfiltrate sensitive user data. No zero-day needed. Just a well-placed sentence and an over-permissioned agent.

3 Mental Models to Apply

1. Every Agent Is a Risk Multiplier

If a prompt can change behavior, then every user input is potentially malicious. Treatagents as dynamic execution environments, not passive assistants.

2. Least Privilege Is Now Dynamic

The principle of least privilege is a cornerstone of modern security: only give systems the minimum access they need to do their job. But in the age of agentic AI, the "job" is no longer fixed — it's determined at runtime.

A traditional microservice might always need access to Service A and B, but an AI agent might only need access to Service B when the user asks it to perform a specific function, and even then, only within a narrow context (like a single record or a limited time window). Anything more is over-permissioning.

What Dynamic Least Privilege Looks Like

- Time-scoped credentials

- Purpose-based access requests

- Composable scopes

Anti-patterns to Avoid

- Static API keys shared across multiple agent tasks

- Long-lived tokens stored in memory across sessions

- Overbroad OAuth scopes like 'full_access' granted at install time

If you can't fully predict what your agent might do, then the least you can do is limit the blast radius when (not if) your agent is manipulated (or given vague instructions and loose permissions). Agents aim to complete their goals, but not necessarily follow strict guidelines if they conflict with their goals.

3. Observability Logging

You're not just tracking function calls, you're tracking intent. Prompt traces, input history, and model output should all be auditable. Perhaps prompt signing would be a good start.

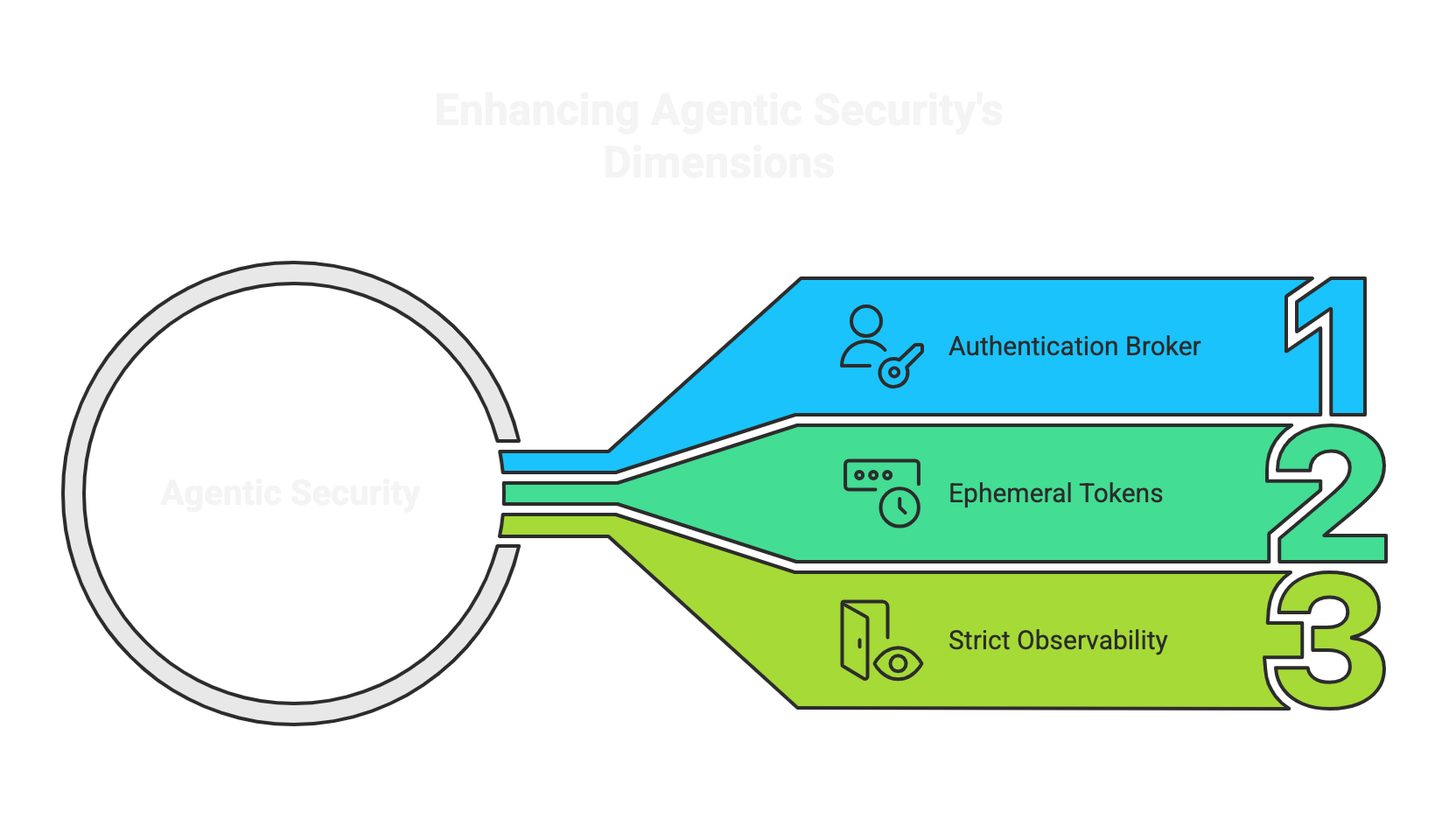

3 Tools to Actually Enforce This

Identity Abstraction (AuthN)

Use tools like OAuth + Service Identity (e.g. SPIFFE, AWS IAM Roles Anywhere, Workload Identity Federation in GCP) to give agents their own credentials, not your own or your app's.

Request-scoped Authorization (AuthZ)

Gate every API call or internal action through fine-grained access control (think Oso, Cedar, or OpenFGA). Pair this with per-request context. This is easier said than done, but it's a good goal.

Suspicious Activity Observability

Deploy agent-aware observability tools (e.g., Langfuse, OpenTelemetry for LLMs, Arize for RAG/Agents) to detect deviations from expected behavior.

Conclusion

Bottom Line

Giving AI agents broad, persistent access is like handing your intern the AWS primary credentials and telling them to "figure it out." You can use GenAI and Agents to accelerate workflows, automate decisions, and support your users, but only if your architecture embraces zero trust, not zero oversight.

Don't let a calendar invite bring down your system.